Last updated on February 22nd, 2024 at 12:29 pm

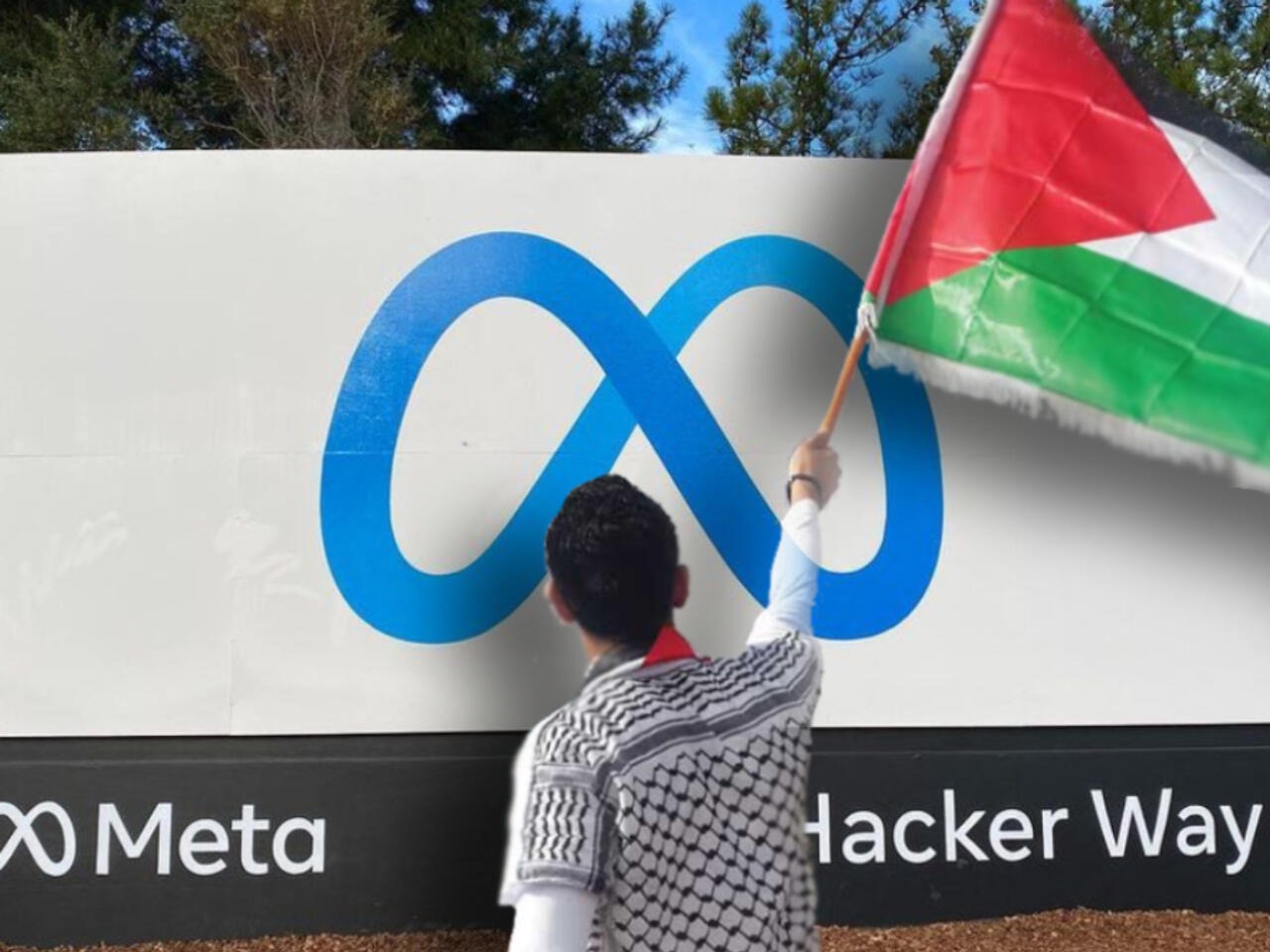

A rights group alleges that Facebook and Instagram frequently engage in ‘six key patterns of undue censorship’ of content supporting Palestine

According to a new report from Human Rights Watch (HRW), Meta has been involved in “systemic and global” censorship of pro-Palestinian content since the outbreak of the Israel-Gaza war on 7 October.

In a critical 51-page report, the organization documented and reviewed over a thousand reported instances of Meta removing content and suspending or permanently banning accounts on Facebook and Instagram. According to HRW, the company exhibited “six key patterns of undue censorship” of content supporting Palestine and Palestinians. These include removing posts, stories, and comments; disabling accounts; restricting users’ ability to interact with others’ posts; and “shadow banning,” in which the visibility and reach of a person’s content are significantly reduced.

Examples cited include content from over 60 countries, mostly in English, all expressing peaceful support for Palestine in diverse ways. The report also mentioned that even HRW’s own posts seeking examples of online censorship were flagged as spam.

The group stated in the report that censorship of Palestine-related content on Instagram and Facebook is systemic and global, and that Meta’s inconsistent enforcement of its policies has led to the erroneous removal of such content. The report cited “erroneous implementation, overreliance on automated tools to moderate content, and undue government influence over content removals” as the roots of the problem.

In a statement to the Guardian, Meta acknowledged that it makes errors that are “frustrating” for people but stated that “the implication that we deliberately and systemically suppress a particular voice is false. Claiming that 1,000 examples, out of the enormous amount of content posted about the conflict, are proof of ‘systemic censorship’ may make for a good headline, but that doesn’t make the claim any less misleading.”

Meta said it is the only company in the world to have publicly disclosed human rights due diligence on issues related to Israel and Palestine.

The company’s statement added: “This report overlooks the challenges of enforcing our policies worldwide during a rapidly evolving, highly polarized, and intense conflict, which has resulted in an uptick in reported content. Our policies are crafted to enable everyone to express themselves while also ensuring the safety of our platforms.”

For the second time this month, Meta faces scrutiny over allegations of regularly suppressing pro-Palestinian content and voices.

Last week, Elizabeth Warren, the Democratic senator for Massachusetts, wrote to Meta’s co-founder and CEO, Mark Zuckerberg, seeking information after numerous Instagram users reported since October that their content had been demoted or removed and their accounts shadow banned.

On Tuesday, Meta’s oversight board stated that the company had erred in removing two specific videos of the conflict from Instagram and Facebook. The board deemed the videos important for “informing the world about human suffering on both sides.” One video, posted on Instagram, depicted the aftermath of an airstrike near al-Shifa hospital in Gaza; the other, posted on Facebook, showed a woman being taken hostage during the 7 October attack. Both videos were reinstated.

Users of Meta’s platforms have reported what they claim is technological bias in favor of pro-Israel content and against pro-Palestinian posts. For example, Instagram’s translation software rendered “Palestinian” followed by the Arabic phrase “Praise be to Allah” as “Palestinian terrorists” in English. Additionally, WhatsApp’s AI, when asked to generate images of Palestinian boys and girls, produced cartoon children with guns, while its images of Israeli children did not include firearms.