Last updated on April 6th, 2024 at 10:36 am

A US company aims to take the lead in artificial intelligence and other industries, having developed a model capable of controlling humanoid robots.

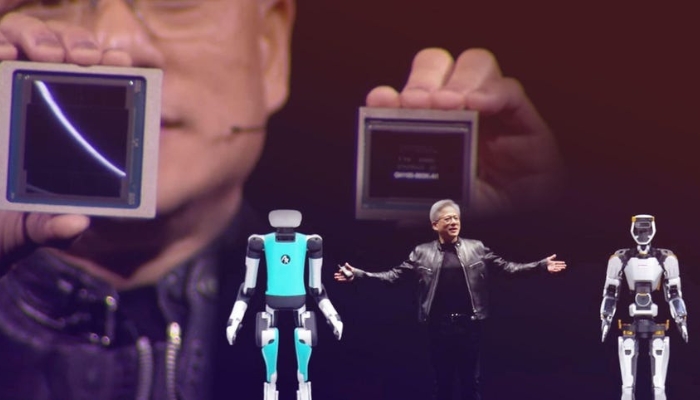

Nvidia has strengthened its position in artificial intelligence by introducing a new “superchip,” a quantum computing service, and a new set of tools aimed at advancing the development of general-purpose humanoid robotics, bringing us closer to the sci-fi dream. Let’s explore Nvidia’s recent developments and their potential implications.

What is Nvidia doing?

The highlight of the company’s annual developer conference on Monday was the introduction of the “Blackwell” series of AI chips. These chips are designed to power the hugely expensive data centers that train cutting-edge AI models such as the latest iterations of GPT, Claude, and Gemini.

The first of these, the Blackwell B200, is a relatively straightforward upgrade from the company’s existing H100 AI chip. Nvidia stated that training an AI model the size of GPT-4 currently requires about 8,000 H100 chips and 15 megawatts of power, enough to supply around 30,000 typical British homes.

With the new chips, the same training run would need only 2,000 B200s and 4 MW of power. This could cut the AI industry’s electricity consumption, or it could let the same amount of electricity train much larger AI models in the near future.
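The arithmetic behind Nvidia’s comparison is easy to check. The short Python sketch below uses only the chip counts and power figures quoted above. The training duration (`DAYS`) is a hypothetical placeholder, since no duration is given here; the sketch assumes both runs take equally long, so the energy saving comes out the same as the power saving whatever value is used.

```python
# Figures Nvidia quoted for training a GPT-4-sized model (taken from the text above).
H100_CHIPS, H100_MW = 8_000, 15.0
B200_CHIPS, B200_MW = 2_000, 4.0

# Hypothetical placeholder: no training duration is given. We assume both
# runs take the same time, so the energy ratio does not depend on this value.
DAYS = 90

def kw_per_chip(chips: int, megawatts: float) -> float:
    """Average power per chip in kW (total quoted power divided by chip count)."""
    return megawatts * 1_000 / chips

def energy_mwh(megawatts: float, days: float) -> float:
    """Energy in MWh if the given power is drawn continuously for `days` days."""
    return megawatts * 24 * days

chip_reduction = H100_CHIPS / B200_CHIPS   # how many times fewer chips
power_reduction = H100_MW / B200_MW        # how many times less power
energy_ratio = energy_mwh(H100_MW, DAYS) / energy_mwh(B200_MW, DAYS)

print(f"Chips: {chip_reduction:.2f}x fewer")
print(f"Power: {power_reduction:.2f}x lower")
print(f"Energy over {DAYS} days: {energy_ratio:.2f}x lower")
print(f"kW per chip: H100 {kw_per_chip(H100_CHIPS, H100_MW):.3f}, "
      f"B200 {kw_per_chip(B200_CHIPS, B200_MW):.3f}")
```

Splitting the totals this way shows something the headline comparison hides: average power per chip actually rises slightly (about 1.9 kW per H100 against 2 kW per B200). The saving therefore comes from needing a quarter as many chips, not from each chip drawing less. These per-chip numbers are simply totals divided by chip count, so they fold in whatever else the quoted megawatts cover and are not chip specifications.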

What makes a chip ‘super’?

In addition to the B200, Nvidia introduced another member of the Blackwell series, the GB200 “superchip,” which combines two B200 chips on a single board with the company’s Grace CPU. Nvidia claims that this system offers “30x the performance” for the server farms that run chatbots such as Claude or ChatGPT, as opposed to those that train them. The system also promises to cut energy consumption by up to 25 times.

Putting everything on the same board improves efficiency by cutting the time the chips spend communicating with each other. That lets them devote more of their processing power to the calculations that make chatbots work, or, at the very least, talk.

What if I want bigger?

Nvidia, with a market value exceeding $2 trillion (£1.6 trillion), is happy to oblige. Consider the company’s GB200 NVL72: a single server rack equipped with 72 B200 chips, connected by nearly two miles of cabling. Need more? The DGX SuperPOD combines eight of these racks into a single, shipping-container-sized AI data center in a box. Pricing was not disclosed at the event, but it is safe to assume that if you have to ask, you can’t afford it. Even the previous generation of chips cost around $100,000 each.

What about my robots?

Project GR00T, seemingly named after Marvel’s arboriform alien character but not explicitly linked to it, is a new foundation model from Nvidia designed for controlling humanoid robots. A foundation model, like GPT-4 for text or Stable Diffusion for image generation, is the underlying AI model on which specific applications are built. Foundation models are the most expensive part of the sector to create, but they are also the catalysts for everything that follows, because they can later be “fine-tuned” for specific uses.

Nvidia’s foundation model for robots will enable them to “comprehend natural language and imitate movements by observing human actions – rapidly acquiring coordination, dexterity, and other skills necessary to navigate, adapt, and interact with the real world.”

GR00T is paired with another piece of Nvidia technology (and another Marvel reference) called Jetson Thor, a system-on-a-chip designed specifically to serve as a robot’s brain. The ultimate objective is to create an autonomous machine that can be instructed using ordinary human speech to perform general tasks, even those it has not been explicitly trained for.

Quantum?

One of the few buzzy sectors Nvidia had not yet entered was quantum cloud computing. The technology, still largely confined to research, is already offered through services from Microsoft and Amazon, and now Nvidia is joining the fray.

However, Nvidia’s cloud service will not be connected directly to a quantum computer. Instead, it uses the company’s AI chips to simulate one, letting researchers test their theories without paying for access to a real quantum computer, which is both rare and expensive. Nvidia plans to offer access to third-party quantum computers through the platform in the future.
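Simulating a quantum computer on conventional hardware typically means tracking its full “state vector”: a list of 2^n complex amplitudes for n qubits, updated every time a gate is applied. The Python sketch below is a generic, minimal illustration of that idea, not Nvidia’s service or API; all function names are hypothetical. It prepares a two-qubit entangled (Bell) state and estimates how much memory larger simulations would need, assuming 16-byte double-precision complex amplitudes.

```python
import math

def zero_state(n_qubits: int) -> list[complex]:
    """State vector for |00...0>: 2**n amplitudes, all zero except the first."""
    state = [0j] * (2 ** n_qubits)
    state[0] = 1 + 0j
    return state

def apply_hadamard(state: list[complex], target: int) -> list[complex]:
    """Apply a Hadamard gate to qubit `target` (bit 0 = least significant bit)."""
    h = 1 / math.sqrt(2)
    new = state[:]
    mask = 1 << target
    for i in range(len(state)):
        if not i & mask:  # visit each (target=0, target=1) pair exactly once
            a, b = state[i], state[i | mask]
            new[i] = h * (a + b)
            new[i | mask] = h * (a - b)
    return new

def apply_cnot(state: list[complex], control: int, target: int) -> list[complex]:
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new

def memory_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector (assumes 16-byte complex amplitudes)."""
    return bytes_per_amplitude * 2 ** n_qubits

# Build a Bell state: Hadamard on qubit 0, then CNOT from qubit 0 to qubit 1.
state = zero_state(2)
state = apply_hadamard(state, 0)
state = apply_cnot(state, control=0, target=1)
probabilities = [abs(amp) ** 2 for amp in state]

print("Probabilities for |00>, |01>, |10>, |11>:", probabilities)
print(f"30 qubits need {memory_bytes(30) / 2**30:.0f} GiB of amplitudes")
```

Only |00> and |11> end up with non-zero probability (about 0.5 each), the signature of entanglement. Because the amplitude count doubles with every added qubit, memory and compute grow exponentially, which helps explain why this kind of simulation leans on large pools of GPU memory and processing power.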